How To Build a Durable AI Agent with Temporal and Python

Introduction

An AI agent uses large language models (LLMs) to plan and execute steps towards a goal. While attempting to reach its goal, the agent can perform actions such as searching for information, interacting with external services, and even calling other agents. However, building reliable AI agents presents various challenges. Network failures, long-running workflows, observability challenges, and more make building AI agents a textbook distributed systems problem.

Temporal orchestrates long-running workflows, automatically handles failure cases from network outages to server crashes, provides insights into your running applications, and more. These features provide the resiliency and durability necessary to build reliable agents that users can rely on.

In this tutorial you'll build an AI agent using Temporal that searches for events in a given city, helps you book a plane ticket, and creates an invoice for the trip. The user will interact with this application through a chatbot interface, communicating with the agent using natural language. Throughout this tutorial you will implement the following components:

- Various tools the agent will use to search for events, find flights, and generate invoices.

- An agent goal that will specify what overall task the agent is trying to achieve and what tools it is allowed to use.

- Temporal Workflows that will orchestrate multi-turn conversations and ensure durability across failures

- Temporal Activities that execute tools and language model calls with automatic retry logic

- A FastAPI backend that connects the web interface to your Temporal Workflows

- A web-based chat interface that allows users to interact with the agent

By the end of this tutorial, you will have a modular, durable AI agent that you can extend to run any goal using any set of tools. Your agent will be able to recover from failure, whether it's a hardware failure, a tool failure, or an LLM failure. And you'll be able to use Temporal to build reliable AI applications that maintain state and provide consistent user experiences.

You can find the code for this tutorial on GitHub in the tutorial-temporal-ai-agent repository.

Prerequisites

Before starting this tutorial, ensure that you have the following on your local machine:

Required

- The Temporal CLI development service installed and verified.

- Python 3.9 or higher installed.

Verify your installation by running

python3 --versionin your terminal. - The

uvpackage and project manager installed.uvis a modern, fast Python package manager that will handle virtual environments and dependencies. - The command line tool curl installed for downloading certain files.

- Node.js 18 or higher installed.

You can verify your installation with

node --versionandnpm --version. - An OpenAI API key saved securely where you can access it. You may need to create an OpenAI account first. You will use this key to configure the LLM integration.

OpenAI API Keys require purchasing credits to use. You can succeed with this tutorial with minimal credits; in our experience, less than $1 will suffice.

Optional

You can opt to use real API services for your tools, or use provided mock functions.

- A free RapidAPI Sky Scrapper API Key saved securely where you can access it. You will use this to search for flights.

- A free Stripe Account with a configured sandbox. You will use this to generate fake invoices for the flights that are being booked.

Concepts

Additionally, this tutorial assumes you have basic familiarity with:

Programming Concepts

- Temporal fundamentals such as Workflows, Activities, Workers, Signals, and Queries

- Python fundamentals such as functions, classes, async/await syntax, and virtual environments

- Command line interface and running commands in a terminal or command prompt

- REST API concepts including HTTP requests and JSON responses

- How to set and use environment variables in your operating system

AI Concepts

- A Mental Model for Agentic AI Applications

- Building an agentic system that's actually production ready

- Why Agentic Flows Need Distributed Systems

Setting up your development environment

Before you start coding, you need to set up your Python developer environment. In this step, you will set up your project structure, install the necessary Python packages, and configure the Python environment needed to build your AI agent.

First, create your project using uv:

uv init temporal-ai-agent --python ">=3.9"

uv is a modern Python project and packaging tool that sets up a project structure for you.

Running this command creates the following default Python package structure for you:

temporal-ai-agent/

├── .gitignore

├── .python-version

├── main.py

├── README.md

├── pyproject.toml

└── uv.lock

It automatically runs a git init command for you, provides you with the default .gitignore for Python, creates a .python-version file that has the project's default Python version, a README.md, a Hello World main.py program, and a pyproject.toml file for managing the projects packages and environment.

Next, change directories into your newly created project:

cd temporal-ai-agent

You won't need the main.py file, so delete it:

rm main.py

Next, create your virtual environment by running the following command:

uv venv

This creates a virtual environment named .venv in the current working directory.

Now that you have a virtual environment created, add the dependencies needed to build your AI agent system:

uv add python-dotenv fastapi jinja2 litellm stripe temporalio uvicorn requests

This installs all the necessary packages:

python-dotenv- For loading environment variables from a.envfilefastapianduvicorn- Web framework and server for the API backendjinja2- Template enginelitellm- Unified interface for different language model providersstripe- Payment processing library for the invoice generation demotemporalio- The Temporal Python SDKrequests- HTTP library for API calls

Finally, add the following lines to the end of your pyproject.toml file:

[build-system]

requires = ["hatchling"]

build-backend = "hatchling.build"

# Tell hatchling what to include

[tool.hatch.build.targets.wheel]

packages = ["activities", "api", "models", "prompts", "shared", "tools", "workflows"]

This configures uv as to which packages to include and enable for execution.

You will create these packages later in the tutorial.

The pyproject.toml is complete and will need no more revisions. You can review the complete file and copy the code here

[project]

name = "temporal-ai-agent"

version = "0.1.0"

description = "Add your description here"

readme = "README.md"

requires-python = ">=3.9"

dependencies = [

"python-dotenv>=1.0.0",

"fastapi>=0.115.12",

"jinja2>=3.1.6",

"litellm>=1.72.2",

"stripe>=12.2.0",

"temporalio>=1.12.0",

"uvicorn>=0.34.3",

"requests>=2.32.4",

]

[build-system]

requires = ["hatchling"]

build-backend = "hatchling.build"

# Tell hatchling what to include

[tool.hatch.build.targets.wheel]

packages = ["activities", "api", "models", "prompts", "shared", "tools", "workflows"]

Next, create a .env file to store your configuration:

touch .env

Next, copy the following configuration to your .env file.

# LLM Configuration

LLM_MODEL=openai/gpt-4o

LLM_KEY=YOUR_OPEN_AI_KEY

# Set if the user should click a Confirm button in the UI to allow the tool

# to execute

SHOW_CONFIRM=True

# Temporal Configuration

TEMPORAL_ADDRESS=localhost:7233

TEMPORAL_NAMESPACE=default

TEMPORAL_TASK_QUEUE=agent-task-queue

# (Optional) - Uncomment both lines and set RAPIDAPI_KEY if you plan on

# using the real flights API

# RAPIDAPI_KEY=YOUR_RAPID_API_KEY

# RAPIDAPI_HOST_FLIGHTS=sky-scrapper.p.rapidapi.com

# (Optional) - Uncomment and set STRIPE_API_KEY if you plan on using the Stripe

# API to generate a fake invoice

# STRIPE_API_KEY=YOUR_STRIPE_API_KEY

# Uncomment if connecting to Temporal Cloud using mTLS (not needed for local dev server)

# TEMPORAL_TLS_CERT='path/to/cert.pem'

# TEMPORAL_TLS_KEY='path/to/key.pem'

# Uncomment if connecting to Temporal Cloud using API key (not needed for local dev server)

# TEMPORAL_API_KEY=abcdef1234567890

Once copied, replace YOUR_OPEN_API_KEY with your OpenAI API key.

Setting SHOW_CONFIRM=True requires the user to confirm each tool prior to it being executed.

This will allow you to see what the agent is doing step by step.

These are the only two mandatory variables to set.

This tutorial provides both an ability to create pseudo tools that perform simulations, or tools that use external APIs to achieve their goals.

If you plan on using the RapidAPI SkyScraper API to look up flight data or the Stripe API to generate an invoice, you can uncomment these lines and provide the API keys here.

Additionally, if you plan on connecting to Temporal Cloud, you will need to update the TEMPORAL_ADDRESS and TEMPORAL_NAMESPACE parameters to connect to your Temporal Cloud instance.

You will also need to uncomment and set the TEMPORAL_TLS or TEMPORAL_API_KEY variables, depending on which authentication method you are using.

As this project is using LiteLLM, it supports various different LLM providers. This tutorial will use OpenAI's gpt-4o, but you are welcome to use whichever LLM you wish, so long as it is supported by LiteLLM.

At this point, you have configured your developer environment to include a Python project managed by uv with all required dependencies to build a Temporal powered agentic AI, and all necessary environment variables.

Now that you have set up your developer environment, you will build the tools that your agent will use to perform the various tasks it needs to accomplish its goal.

Constructing the agent toolkit

In this step, you will acquire the tools that will be available to your agent. Agents are aware of the tools they have available to them while attempting to achieve their goal. The agent will evaluate which tools are available and execute a tool if the agent believes it will provide the result the agent needs to progress in its task.

These tools can take various forms, but in this tutorial they're implemented as a series of independent Python scripts that provide data in a specific format that the agent can process.

There are three tools: a find_events tool, a search_flights tool, and a create_invoice tool.

The LLM will decide when to use each tool as it interacts with the user who is trying to find an event and book a flight to attend it.

You could implement these tools yourself, or you could download a tool and provide it to an agent.

For this tutorial, you will download the tools directly from the companion GitHub repository.

Setting up the tools package

To get started, first create the directory for your tools modules:

mkdir tools

Then change directories into it:

cd tools

However, for this to be an importable tools package, you will need to add a __init__.py file.

It can be blank for now, so create it with the following command:

touch __init__.py

Now that you have set up the structure for your tools package, you'll acquire and test the tools needed to have the agent succeed with its goal.

Acquiring the find_events tool

The find_events tool searches for events within a given city during a certain time of year.

The tool takes a month and city as inputs and provides events for not only the month that was provided, but the months before and after the given month as well.

The LLM will use this tool to search for events when helping the user plan their trip.

This tool doesn't use an API, but rather simulates looking events up in a data store using mock data.

First, create a data directory within the tools directory to store the sample event data and change directories into it:

mkdir data

cd data

Next, run the following command to download the sample data from the companion GitHub repository:

curl -o find_events_data.json https://raw.githubusercontent.com/temporal-community/tutorial-temporal-ai-agent/main/tools/data/find_events_data.json

You can confirm you have the correct data by running the following command to sample the file and comparing it to the output:

head -8 find_events_data.json

{

"New York": [

{

"eventName": "Winter Jazzfest",

"dateFrom": "2025-01-10",

"dateTo": "2025-01-19",

"description": "A multi-venue jazz festival featuring emerging and established artists performing across Greenwich Village venues."

},

If the dates appear to be far in the past, don't worry.

There is logic within the find_events tool that automatically adjusts the date to ensure that no dates can be presented that are in the past.

Next, change directories back up one directory to the tools directory:

cd ..

Now that you have the data, download the find_events tool using the command:

curl -o find_events.py https://raw.githubusercontent.com/temporal-community/tutorial-temporal-ai-agent/main/tools/find_events.py

Open the file and explore the logic; you should never download a file from the internet and just trust it.

Try to answer the following questions about the codebase:

- Where in the code does it determine the adjacent months?

- How does the tool prevent the data from

find_events_data.jsonbeing presented with a date that has already passed? - What is the schema for the data that will be returned?

Once you have finished reviewing the code, navigate to the root directory of your project and create a scripts directory for testing this tool. The root of your project should be one level higher your current directory, so you can get there by running the following command:

cd ..

Create the scripts directory:

mkdir scripts

Now create a test script named find_events_test.py in the scripts directory and add the following to test your script:

import json

from tools.find_events import find_events

if __name__ == "__main__":

search_args = {"city": "Austin", "month": "December"}

results = find_events(search_args)

print(json.dumps(results, indent=2))

This script will check for events in Austin, TX in the month of December.

From the root of your project, run the script using the following command to verify it's configured correctly:

uv run scripts/find_events_test.py

You should see the following output:

{

"note": "Returning events from December plus one month either side (i.e., November, December, January).",

"events": [

{

"city": "Austin",

"eventName": "Austin Celtic Festival",

"dateFrom": "2025-11-08",

"dateTo": "2025-11-09",

"description": "Celebration of Celtic culture featuring traditional music, dance, crafts, and Irish food.",

"month": "previous month"

},

{

"city": "Austin",

"eventName": "Trail of Lights",

"dateFrom": "2025-12-05",

"dateTo": "2025-12-23",

"description": "Holiday light display in Zilker Park featuring festive decorations, food vendors, and family activities.",

"month": "requested month"

}

]

}

Now that you have the find_events tool functioning, it's time to do the same for the search_flights tool.

Acquiring the search_flights tool

The search_flights tool searches roundtrip flights to a destination.

The tool takes the origin, destination, arrival date, and departure date as arguments and returns flight data containing details such as carrier, price, and flight code for the flights.

The LLM will use this tool to find flights to the location once the user has selected the dates they wish to travel.

This tool can either use the RapidAPI SkyScraper API if you have an API key configured in your .env file, or it will generate mock data if it's unable to detect the API key.

First, change directories into the tools directory:

cd tools

Then get the tool by running the following command to download it from the companion GitHub repository:

curl -o search_flights.py https://raw.githubusercontent.com/temporal-community/tutorial-temporal-ai-agent/main/tools/search_flights.py

Next, familiarize yourself with the tool by reviewing the code. Try to answer the following questions about the code:

- What is the purpose of the

search_flightsfunction? (It's not as straightforward of an answer as it may appear) - How many REST API calls does is it take to call complete the real flight API search?

Once you have finished reviewing the code, you will test it.

Create another test within the scripts directory named search_flights_test.py and add the following code:

import json

from tools.search_flights import search_flights

if __name__ == "__main__":

flights = search_flights(

{

"origin": "ORD",

"destination": "DFW",

"dateDepart": "2025-09-20",

"dateReturn": "2025-09-22",

}

)

print(json.dumps(flights, indent=2))

This test searches for a flight from Chicago to Dallas-Fort Worth. However, since this tool can operate in either a mock mode or live API mode, there are two ways to verify it.

Testing the mocked search_flight tool

Let's start by testing it without the RapidAPI key.

If you have that set in your .env file, comment it out for now, or skip this step.

Change directories back to the root of the project and run the test using the following command:

cd ..

uv run scripts/search_flights_test.py

Your output will vary, as the mock data function randomly generates results. The output should, however, look something like this with more items in the results list:

{

"currency": "USD",

"destination": "DFW",

"origin": "ORD",

"results": [

{

"operating_carrier": "Southwest Airlines",

"outbound_flight_code": "WN427",

"price": 462.43,

"return_flight_code": "WN744",

"return_operating_carrier": "Southwest Airlines"

}

]

}

If you aren't planning on using the Sky Scrapper API, you can skip this next step and continue if you'd like.

Testing the Sky Scrapper powered search_flights tool

Testing the API-powered version of the tool is similar to testing the mocked version.

First, if you haven't uncommented the RAPID_API lines in your .env file and added your API key, do this before running the test.

You will also need to uncomment the RAPIDAPI_HOST_FLIGHTS environment variable as this is the endpoint the tool will be accessing.

RAPIDAPI_KEY=YOUR_RAPID_API_KEY

RAPIDAPI_HOST_FLIGHTS=sky-scrapper.p.rapidapi.com

Next, review the code in scripts/search_flights_test.py and make sure that the dateDepart and dateReturn dates are both in the future.

At this point you have no way of determining if the dates are in the past, and the API will return an error if you try to search for flights in the past.

Once you've reviewed the code, make sure you are at the root directory of the project.

If are still in the scripts directory, run the following command:

cd ..

Then run the test using the following command:

uv run scripts/search_flights_test.py

If you've changed the dates or cities, you may see different results, but the format should be similar to this:

Searching for: ORD

Searching for: DFW

{

"origin": "ORD",

"destination": "DFW",

"currency": "USD",

"results": [

{

"outbound_flight_code": "NK824",

"operating_carrier": "Spirit Airlines",

"return_flight_code": "NK828",

"return_operating_carrier": "Spirit Airlines",

"price": 119.98

},

]

}

If the API gives you cryptic error messages such as Something went wrong or returns an incomplete response, you can try running it a few times and see if you get a different response.

Now that you have finished testing the search_flights tool, you can add the final tool to the agent's toolkit.

Acquiring the create_invoice tool

The final tool is the create_invoice tool.

The tool takes the customer's email and trip information such as the cost of the flight, the description of the event, the number of days until the invoice is due, and generates a sample invoice for that user showing the details of the flight and the cost.

The LLM will use this tool to invoice the customer once the customer has confirmed their travel plans.

This tool can either use the Stripe API if you have an API key configured in your .env file, or it will generate a mock invoice if it is unable to detect an API key.

First, change directories into the tools directory again:

cd tools

Then get the tool by running the following command to download it from the companion GitHub repository:

curl -o create_invoice.py https://raw.githubusercontent.com/temporal-community/tutorial-temporal-ai-agent/main/tools/create_invoice.py

Next, familiarize yourself with the tool by reviewing the code. Try to answer the following questions about the code:

- What customer related verification does the tool do before creating the invoice?

- What does the tool do if this verification fails?

Once you have finished reviewing the code, test it.

Create another test within the scripts directory named create_invoice_test.py and add the following code:

from tools.create_invoice import create_invoice

if __name__ == "__main__":

args_create = {

"email": "ziggy.tardigrade@example.com",

"amount": 150.00,

"description": "Flight to Replay",

"days_until_due": 7,

}

invoice_details = create_invoice(args_create)

print(invoice_details)

However, since this tool can operate in either a mock mode or live API mode, there are two ways to verify it.

Testing the mocked create_invoice tool

Start by testing it without the Stripe key.

If you have it set in your .env file, comment it out for now, or skip this step.

Change directories back to the root project directory and run the test using the following command:

cd ..

uv run scripts/create_invoice_test.py

The output should be:

[CreateInvoice] Creating invoice with: {'email': 'ziggy.tardigrade@example.com', 'amount': 150.0, 'description': 'Flight to Replay', 'days_until_due': 7}

{'invoiceStatus': 'generated', 'invoiceURL': 'https://pay.example.com/invoice/12345', 'reference': 'INV-12345'}

If you aren't planning on using the Stripe API, you can skip this next step and continue if you'd like.

Testing the Stripe-powered create_invoice tool

Testing the Stripe powered version of the tool is nearly identical to testing the mocked version of the tool.

First, if you haven't uncommented the STRIPE_API_KEY lines in your .env file and added your API key, do this before running the test.

STRIPE_API_KEY=YOUR_STRIPE_API_KEY

Make sure you have set up your Stripe account as a sandbox and are using an API key from there. If it is your first time setting up a Stripe account and you haven't added any billing information, this will be the default. Otherwise the invoices will be real.

Make sure you aren't in the scripts directory any more.

If you are, run the following command to get back to the root directory of the project:

cd ..

Then run the test using the following command the same way you would the mocked version:

uv run scripts/create_invoice_test.py

The result will contain an invoiceURL, as well as the status of the invoice and a reference.

{'invoiceStatus': 'open', 'invoiceURL': 'https://invoice.stripe.com/i/acct_1RMFbIQej3CO0i8K/test_YWNjdF8xUk1GYklRZWozQ08wThLLF9TVJpYWZ2WXREVXZrcDJqMGhIM0hSdkVEa2hVYmM0LDE0MTI2NjEwNg0200VaZpBdSc?s=ap', 'reference': 'FEUS4MXS-0001'}

By following that invoice link in a browser, Stripe will present you with a sample invoice in your sandbox environment.

Before you move on, verify that you have created all the necessary files in the correct structure.

So far you've implemented and tested the agents tools. Verify your directory structure and files look and are named appropriately according to the following diagram before continuing:

temporal-ai-agent/

├── .env

├── .gitignore

├── .python-version

├── README.md

├── pyproject.toml

├── uv.lock

├── scripts/

│ ├── create_invoice_test.py

│ ├── find_events_test.py

│ └── search_flights_test.py

└── tools/

├── __init__.py

├── create_invoice.py

├── find_events.py

├── search_flights.py

└── data/

└── find_events_data.json

And those are the three tools in this agent's toolkit to achieve its goal. Other goals may have different tools, and you could add more tools. Next, you'll make the tools available to the agent to use.

Exposing the tools to the agent

Now that you have the tools necessary to complete the agent's goal, you need to implement a way to inform the agent that these tools are available. To do this, you'll create a tool registry. The tool registry will contain a definition of each tool, along with information such as the tool's name, description, and what arguments it accepts.

However, before you create the registry, you should define the tool definition and tool argument as models that can be shared across your codebase.

Defining the core models

Defining the tool arguments, tool definition, and agent goal as custom types allows for better reusability and type hinting.

Temporal also recommends passing a single object between functions, and requires these objects to be serializable.

Given these requirements, you'll implement the ToolArgument and ToolDefinition types as a Python dataclass.

Before you define these models, navigate to the root directory of your project and create the models directory:

mkdir models

Since this directory will be imported throughout your project, it needs to be configured as a module.

To do this, create a blank __init__.py file by running the following command:

touch models/__init__.py

Next, create the file core.py. This file will contain the tool argument and definition models used to in your agent.

Open models/core.py and add the following imports:

from dataclasses import dataclass

from typing import List

Next, add the ToolArgument dataclass to the file:

@dataclass

class ToolArgument:

name: str

type: str

description: str

An instance of this dataclass will represent an argument that your tool can accept, including the name of the argument, a description of what the argument represents, and the type of the argument, such as an int or str.

Next, add the ToolDefinition dataclass to the file:

@dataclass

class ToolDefinition:

name: str

description: str

arguments: List[ToolArgument]

This will hold information about the tool that's provided to the agent so it can determine what action to take.

It defines the name of the tool, a description of what the can do, and an argument list. This list is composed of your ToolArgument objects.

Now that you have the appropriate model to define your tools, you can create a registry of the tools for the agent to access.

Creating the tool registry

Agents use LLMs to determine what action to take and then execute a tool from their toolkit. However, you have to make those tools available to the agent. Now that you have structure for defining your tools, you should create a registry that your agent reads to load the available tools.

Navigate back to the tools directory and create the file tools/tool_registry.py.

In this file you will define all of your tools using the models you defined in the previous step.

First, add the following import to the file to import the models:

from models.core import ToolArgument, ToolDefinition

Next, add the first part of the ToolDefinition for the find_events tool:

find_events_tool = ToolDefinition(

name="FindEvents",

description="Find upcoming events to travel to a given city (e.g., 'New York City') and a date or month. "

"It knows about events in North America only (e.g. major North American cities). "

"It will search 1 month either side of the month provided. "

"Returns a list of events. ",

# arguments to be inserted here in the next step

)

This defines your tool using the ToolDefinition model you defined, gives it a name and a description that the LLM can use to understand the tool and also use as a prompt.

Next you need to add the arguments to this instantiation.

The arguments in the ToolDefinition model were defined as a List[ToolArgument], so you may have multiple arguments within your list.

To complete the definition, add the following code to your find_events_tool instantiation to add the arguments:

arguments=[

ToolArgument(

name="city",

type="string",

description="Which city to search for events",

),

ToolArgument(

name="month",

type="string",

description="The month to search for events (will search 1 month either side of the month provided)",

),

]

The find_events tool requires two arguments, the city and month in which to search, and it also provides a string description so the LLM would know how to prompt the user if an argument is missing.

Bringing it all together, the complete ToolDefinition would be:

find_events_tool = ToolDefinition(

name="FindEvents",

description="Find upcoming events to travel to a given city (e.g., 'New York City') and a date or month. "

"It knows about events in North America only (e.g. major North American cities). "

"It will search 1 month either side of the month provided. "

"Returns a list of events. ",

arguments=[

ToolArgument(

name="city",

type="string",

description="Which city to search for events",

),

ToolArgument(

name="month",

type="string",

description="The month to search for events (will search 1 month either side of the month provided)",

),

],

)

Now that you have the first tool defined in your registry, implement the remaining tool definitions.

Add the following code to register the search_flights tool.

The structure is similar to the find_events tool, except that search_flights requires more arguments, to search for the origin, destination, departure date, return date, and confirmation status.

These arguments are a direct mapping of the required parameters to the RAPIDAPI REST API.

When creating a tool that maps to an API, be sure to include that APIs required parameters as ToolArguments.

search_flights_tool = ToolDefinition(

name="SearchFlights",

description="Search for return flights from an origin to a destination within a date range (dateDepart, dateReturn). "

"You are allowed to suggest dates from the conversation history, but ALWAYS ask the user if ok.",

arguments=[

ToolArgument(

name="origin",

type="string",

description="Airport or city (infer airport code from city and store)",

),

ToolArgument(

name="destination",

type="string",

description="Airport or city code for arrival (infer airport code from city and store)",

),

ToolArgument(

name="dateDepart",

type="ISO8601",

description="Start of date range in human readable format, when you want to depart",

),

ToolArgument(

name="dateReturn",

type="ISO8601",

description="End of date range in human readable format, when you want to return",

),

ToolArgument(

name="userConfirmation",

type="string",

description="Indication of the user's desire to search flights, and to confirm the details "

+ "before moving on to the next step",

),

],

)

And then add the following code to register the create_invoice tool.

This tool requires three arguments: the amount to be paid, the details of the trip, and a user confirmation.

create_invoice_tool = ToolDefinition(

name="CreateInvoice",

description="Generate an invoice for the items described for the total inferred by the conversation history so far. Returns URL to invoice.",

arguments=[

ToolArgument(

name="amount",

type="float",

description="The total cost to be invoiced. Infer this from the conversation history.",

),

ToolArgument(

name="tripDetails",

type="string",

description="A description of the item details to be invoiced, inferred from the conversation history.",

),

ToolArgument(

name="userConfirmation",

type="string",

description="Indication of user's desire to create an invoice",

),

],

)

You now have a tool registry your agent imports to inform it of what tools it has available to execute.

Finally, you need to create a mapping between the tool registered in tool_registry.py with the actual functions the Activity will invoke during Workflow execution.

Mapping the registry to the functions

Your agent will use the registry to identify which tool it should use, but it still needs to translate the string name of the tool to the function definition the code will execute.

You will modify the code in tool_registry to add this functionality.

First, add the following imports with the other imports in tool_registry.py:

from typing import Any, Callable, Dict

from tools.create_invoice import create_invoice

from tools.find_events import find_events

from tools.search_flights import search_flights

These handle the appropriate typings, as well as import the function from each of the tool files.

Next, go to the bottom of the file after the previous tool definitions and add the code to map the string representation of the ToolDefinition to the function:

# Dictionary mapping tool names to their handler functions

TOOL_HANDLERS: Dict[str, Callable[..., Any]] = {

"SearchFlights": search_flights,

"CreateInvoice": create_invoice,

"FindEvents": find_events,

}

Finally, add a function named get_handler that returns the function given the tool name:

def get_handler(tool_name: str) -> Callable[..., Any]:

"""Get the handler function for a given tool name.

Args:

tool_name: The name of the tool to get the handler for.

Returns:

The handler function for the specified tool.

Raises:

ValueError: If the tool name is not found in the registry.

"""

if tool_name not in TOOL_HANDLERS:

raise ValueError(f"Unknown tool: {tool_name}")

return TOOL_HANDLERS[tool_name]

You have now successfully implemented a structured model for expressing tools available to your AI agent. This is necessary for building a robust, capable agent.

The tools/tool_registry.py is complete and will need no more revisions. You can review the complete file and copy the code here.

from typing import Any, Callable, Dict

from models.core import ToolArgument, ToolDefinition

from tools.create_invoice import create_invoice

from tools.find_events import find_events

from tools.search_flights import search_flights

find_events_tool = ToolDefinition(

name="FindEvents",

description="Find upcoming events to travel to a given city (e.g., 'New York City') and a date or month. "

"It knows about events in North America only (e.g. major North American cities). "

"It will search 1 month either side of the month provided. "

"Returns a list of events. ",

arguments=[

ToolArgument(

name="city",

type="string",

description="Which city to search for events",

),

ToolArgument(

name="month",

type="string",

description="The month to search for events (will search 1 month either side of the month provided)",

),

],

)

search_flights_tool = ToolDefinition(

name="SearchFlights",

description="Search for return flights from an origin to a destination within a date range (dateDepart, dateReturn). "

"You are allowed to suggest dates from the conversation history, but ALWAYS ask the user if ok.",

arguments=[

ToolArgument(

name="origin",

type="string",

description="Airport or city (infer airport code from city and store)",

),

ToolArgument(

name="destination",

type="string",

description="Airport or city code for arrival (infer airport code from city and store)",

),

ToolArgument(

name="dateDepart",

type="ISO8601",

description="Start of date range in human readable format, when you want to depart",

),

ToolArgument(

name="dateReturn",

type="ISO8601",

description="End of date range in human readable format, when you want to return",

),

ToolArgument(

name="userConfirmation",

type="string",

description="Indication of the user's desire to search flights, and to confirm the details "

+ "before moving on to the next step",

),

],

)

create_invoice_tool = ToolDefinition(

name="CreateInvoice",

description="Generate an invoice for the items described for the total inferred by the conversation history so far. Returns URL to invoice.",

arguments=[

ToolArgument(

name="amount",

type="float",

description="The total cost to be invoiced. Infer this from the conversation history.",

),

ToolArgument(

name="tripDetails",

type="string",

description="A description of the item details to be invoiced, inferred from the conversation history.",

),

ToolArgument(

name="userConfirmation",

type="string",

description="Indication of user's desire to create an invoice",

),

],

)

# Dictionary mapping tool names to their handler functions

TOOL_HANDLERS: Dict[str, Callable[..., Any]] = {

"SearchFlights": search_flights,

"CreateInvoice": create_invoice,

"FindEvents": find_events,

}

def get_handler(tool_name: str) -> Callable[..., Any]:

"""Get the handler function for a given tool name.

Args:

tool_name: The name of the tool to get the handler for.

Returns:

The handler function for the specified tool.

Raises:

ValueError: If the tool name is not found in the registry.

"""

if tool_name not in TOOL_HANDLERS:

raise ValueError(f"Unknown tool: {tool_name}")

return TOOL_HANDLERS[tool_name]

Before moving on to the next section, verify that your file and directory structure is correct.

You just implemented a model for defining your tools in a way that your agent could discover and use them. Verify that your directory structure and file names are correct according to the following diagram before continuing:

temporal-ai-agent/

├── .env

├── .gitignore

├── .python-version

├── README.md

├── pyproject.toml

├── uv.lock

├── models/

│ ├── __init__.py

│ └── core.py

├── scripts/

│ ├── create_invoice_test.py

│ ├── find_events_test.py

│ └── search_flights_test.py

└── tools/

├── __init__.py

├── create_invoice.py

├── find_events.py

├── search_flights.py

├── tool_registry.py

└── data/

└── find_events_data.json

In the next step, you will use the tool definitions you just created to define the agent's goal.

Designating the agent's goal

An agent's goal is the definition of the task it's trying to achieve.

It achieves this goal by executing tools, analyzing the results, and using an LLM to decide what to do next.

In this tutorial you will define the goal as a combination of several fields, including a description, a starter prompt, an example conversation history, and the list of tools the agent can use to achieve its goal.

Now that you've defined the ToolDefinition that will be available for your agent, you can define the AgentGoal type and create your agent's goal.

Defining the AgentGoal type

To define the AgentGoal type, open models/core.py and add the following code:

@dataclass

class AgentGoal:

agent_name: str

tools: List[ToolDefinition]

description: str

starter_prompt: str

example_conversation_history: str

This dataclass defines your AgentGoal as a combination of a few attributes:

agent_name- A human readable name for the agenttools- A list of tools, defined asToolDefinitiontypes, that the agent can use to achieve its goaldescription- A description of the goal, in a bulleted list format specifying how to achieve it.starter_prompt- A starter prompt for the AI agent to runexample_conversation_history- A sample conversation history of what a successful interaction with this agent would look like

The models/core.py is complete and will need no more revisions. You can review the complete file and copy the code here.

Hover your cursor over the code block to reveal the copy-code option.

from dataclasses import dataclass

from typing import List

@dataclass

class ToolArgument:

name: str

type: str

description: str

@dataclass

class ToolDefinition:

name: str

description: str

arguments: List[ToolArgument]

@dataclass

class AgentGoal:

id: str

category_tag: str

agent_name: str

agent_friendly_description: str

tools: List[ToolDefinition]

description: str = "Description of the tools purpose and overall goal"

starter_prompt: str = "Initial prompt to start the conversation"

example_conversation_history: str = (

"Example conversation history to help the AI agent understand the context of the conversation"

)

Now that you have the type available to define the goal, you will implement the goal for your agent.

Implementing the goal registry

Similar to implementing the tool_registry, next you will implement a goal_registry to define your agent's goal and make it available to the Workflow.

You will do this by creating an instance of your AgentGoal type for every goal you wish to implement.

For this tutorial you will only implement a single goal, named goal_event_flight_invoice, but you may want to use this framework going forward to create your own agent goals at a later date.

To implement your agent's goal, create the file tools/goal_registry.py and add the following imports to the file:

import tools.tool_registry as tool_registry

from models.core import AgentGoal

To create the goal, first create an instance of the AgentGoal dataclass and add the first parameter, agent_name, to identify the goal:

goal_event_flight_invoice = AgentGoal(

agent_name="North America Event Flight Booking",

# ...

Next, pass in the ToolDefnitions that the agent is allowed to use to accomplish its goal to the tools parameter.

Add the following code as the next parameter:

# ...

tools=[

tool_registry.find_events_tool,

tool_registry.search_flights_tool,

tool_registry.create_invoice_tool,

],

# ...

The following parameter defines a detailed description of what the goal is and the ideal path for the agent to take to achieve its goal. Add the following code to the file:

# ...

description="Help the user gather args for these tools in order: "

"1. FindEvents: Find an event to travel to "

"2. SearchFlights: search for a flight around the event dates "

"3. CreateInvoice: Create a simple invoice for the cost of that flight ",

# ...

The next parameter provides a starter prompt for the agent, detailing how it should begin its interaction with every user. A starter prompt is the first prompt an agent sees, and gives the initial set of instructions. Think of this an initialization function for the conversation. A common format is to provide a greeting, explain your purpose, and prompt the user for information the agent needs to succeed.

Add the following code to define your prompt:

# ...

starter_prompt="Welcome me, give me a description of what you can do, then ask me for the details you need to do your job.",

# ...

Finally, draft an example conversation of a successful interaction with your agent to pass in.

LLMs perform better when they have an example of expected output, so providing this aids the LLM in its goal.

Since this is a str type, but the conversation is long, you will define each statement as a line in a list and then use "\n ".join() to create a string from your conversation.

Add the conversation as the final parameter.

# ...

example_conversation_history="\n ".join(

[

"user: I'd like to travel to an event",

"agent: Sure! Let's start by finding an event you'd like to attend. I know about events in North American cities. Could you tell me which city and month you're interested in?",

"user: nyc in may please",

"agent: Great! Let's find an events in New York City in May.",

"user_confirmed_tool_run: <user clicks confirm on FindEvents tool>",

"tool_result: { 'event_name': 'Frieze New York City', 'event_date': '2023-05-01' }",

"agent: Found an event! There's Frieze New York City on May 1 2025, ending on May 14 2025. Would you like to search for flights around these dates?",

"user: Yes, please",

"agent: Let's search for flights around these dates. Could you provide your departure city?",

"user: San Francisco",

"agent: Thanks, searching for flights from San Francisco to New York City around 2023-02-25 to 2023-02-28.",

"user_confirmed_tool_run: <user clicks confirm on SearchFlights tool>"

'tool_result: results including {"flight_number": "AA101", "return_flight_number": "AA102", "price": 850.0}',

"agent: Found some flights! The cheapest is AA101 for $850. Would you like to generate an invoice for this flight?",

"user_confirmed_tool_run: <user clicks confirm on CreateInvoice tool>",

'tool_result: { "status": "success", "invoice": { "flight_number": "AA101", "amount": 850.0 }, invoiceURL: "https://example.com/invoice" }',

"agent: Invoice generated! Here's the link: https://example.com/invoice",

]

),

)

The tools/goal_registry.py is complete and will need no more revisions. You can review the complete file and copy the code here.

Hover your cursor over the code block to reveal the copy-code option.

import tools.tool_registry as tool_registry

from models.core import AgentGoal

goal_event_flight_invoice = AgentGoal(

agent_name="North America Event Flight Booking",

tools=[

tool_registry.find_events_tool,

tool_registry.search_flights_tool,

tool_registry.create_invoice_tool,

],

description="Help the user gather args for these tools in order: "

"1. FindEvents: Find an event to travel to "

"2. SearchFlights: search for a flight around the event dates "

"3. CreateInvoice: Create a simple invoice for the cost of that flight ",

starter_prompt="Welcome me, give me a description of what you can do, then ask me for the details you need to do your job.",

example_conversation_history="\n ".join(

[

"user: I'd like to travel to an event",

"agent: Sure! Let's start by finding an event you'd like to attend. I know about events in North American cities. Could you tell me which city and month you're interested in?",

"user: sydney in may please",

"agent: Great! Let's find an events in New York City in May.",

"user_confirmed_tool_run: <user clicks confirm on FindEvents tool>",

"tool_result: { 'event_name': 'Vivid New York City', 'event_date': '2023-05-01' }",

"agent: Found an event! There's Vivid New York City on May 1 2025, ending on May 14 2025. Would you like to search for flights around these dates?",

"user: Yes, please",

"agent: Let's search for flights around these dates. Could you provide your departure city?",

"user: San Francisco",

"agent: Thanks, searching for flights from San Francisco to New York City around 2023-02-25 to 2023-02-28.",

"user_confirmed_tool_run: <user clicks confirm on SearchFlights tool>"

'tool_result: results including {"flight_number": "AA101", "return_flight_number": "AA102", "price": 850.0}',

"agent: Found some flights! The cheapest is AA101 for $850. Would you like to generate an invoice for this flight?",

"user_confirmed_tool_run: <user clicks confirm on CreateInvoice tool>",

'tool_result: { "status": "success", "invoice": { "flight_number": "AA101", "amount": 850.0 }, invoiceURL: "https://example.com/invoice" }',

"agent: Invoice generated! Here's the link: https://example.com/invoice",

]

),

)

Now that you have defined your agent's goal, you can begin implementing the Activities.

Before moving on to the next section, verify your files and directory structure is correct.

temporal-ai-agent/

├── .env

├── .gitignore

├── .python-version

├── README.md

├── pyproject.toml

├── uv.lock

├── models/

│ ├── __init__.py

│ └── core.py

├── scripts/

│ ├── create_invoice_test.py

│ ├── find_events_test.py

│ └── search_flights_test.py

└── tools/

├── __init__.py

├── create_invoice.py

├── find_events.py

├── goal_registry.py

├── search_flights.py

├── tool_registry.py

└── data/

└── find_events_data.json

Building Temporal Activities to execute non-deterministic agent code

Now that you have built the agent's goal, and the tools it needs to achieve it, you can start building the agent code. In this step, you will create Activities that execute code in your AI agent that can behave non-deterministically, such as making the LLM calls or calling tools. Because tools can call out to external services, have the possibility to fail, be rate limited, or perform other non-deterministic operations, it's safer to always call them in an Activity. When an Activity fails, it's automatically retried by default until it succeeds or is canceled.

Another added benefit of executing your tool as an Activity is that after the Activity completes, the result is saved to an Event History managed by Temporal. If your application were to then crash after executing a few tools, it could reconstruct the state of the execution and use the previous execution's results, without having to re-execute the tools. This provides durability to your agent for intermittent issues, which are common in distributed systems.

Before you can proceed to creating the Activities, however, you need to create the custom types that you'll use for Activity communication.

Recall that Workflow and Activity best practices recommend only passing a single dataclass parameter.

This helps with the evolution of parameters as well as ensuring type safety.

Creating the requests data models

Your agent will require specific types for input and output for both the Activities and the Workflow.

You will put all request-based models in a new file in the models directory named requests.py.

First, open models/requests.py and add the following import statements:

from dataclasses import dataclass, field

from typing import Any, Deque, Dict, List, Literal, Optional, TypedDict, Union

from models.core import AgentGoal

You will use these when creating the new types for your agent.

Next, add the following single attribute data types to the file:

Message = Dict[str, Union[str, Dict[str, Any]]]

ConversationHistory = Dict[str, List[Message]]

NextStep = Literal["confirm", "question", "done"]

CurrentTool = str

These types are used to compose other, multi-attribute dataclass types, or sent as a single parameter.

They are used in the following context of the agent:

Message- A nested dictionary that represents one turn of a conversation between the LLM and the userConversationHistory- A dictionary containing anstrkey and aListofMessagesthat keeps track of the conversation between the LLM and the userNextStep- ALiteralcontaining three options, picked by the agent to decide the next action to take and interpreted by the WorkflowCurrentTool- Anstrrepresentation of the current tool the agent is using

Next, add the following dataclasses for handling the primary agent parameters:

@dataclass

class AgentGoalWorkflowParams:

conversation_summary: Optional[str] = None

prompt_queue: Optional[Deque[str]] = None

@dataclass

class CombinedInput:

agent_goal: AgentGoal

tool_params: AgentGoalWorkflowParams

The AgentWorkflowParams type contains a summary of the conversation and a queue of prompts that the agent needs to process via the LLM.

The CombinedInput type contains the agent's goal and the parameters.

This type is the input that is passed to the main agent Workflow and is used to start the initial Workflow Execution.

Next, add the dataclass that handles the input for calling the LLM for tool planning:

@dataclass

class ToolPromptInput:

prompt: str

context_instructions: str

ToolPromptInput contains the prompt the Activity will issue to the LLM, along with any context that the LLM needs when executing the prompt.

To go along with the this type, you need to add types that store the results of validation of the prompt:

@dataclass

class ValidationInput:

prompt: str

conversation_history: ConversationHistory

agent_goal: AgentGoal

@dataclass

class ValidationResult:

validationResult: bool

validationFailedReason: Dict[str, Any] = field(default_factory=dict)

The ValidationInput type contains the prompt given by the user, the conversation history, and the agent's goal.

An Activity will use this type as input and validate the prompt against the agent's goal.

Conversely, the ValidationResult type will contain the results of this validation Activity and will return a boolean signifying if the prompt passed or failed, and if it did fail a reason why.

Next, add two more dataclasses for handling the input and output of reading environment variables into the Workflow:

@dataclass

class EnvLookupInput:

show_confirm_env_var_name: str

show_confirm_default: bool

@dataclass

class EnvLookupOutput:

show_confirm: bool

Since reading from the filesystem is a non-deterministic operation, this action must be done from an Activity, so it is best practice to define types to handle this in case you ever need to add more environment variables. Your environment variables will contain things such as your API keys, agent configurations, timeouts, and other settings.

Finally, add the class that will contain the next step the agent should take and the data the tool needs to execute:

class ToolData(TypedDict, total=False):

next: NextStep

tool: str

response: str

args: Dict[str, Any]

force_confirm: bool

ToolData contains the NextStep that the agent should take, along with the tool that should be used, the arguments for the tool, the response from the LLM, and a force_confirm boolean.

You may notice this type is different from the previous types, as it is a subclass of TypedDict and not a dataclass.

This is done to handle converting the type to JSON for use in the API later, because dataclasses don't support conversion of nested custom types to JSON.

The models/requests.py is complete and will need no more revisions. You can review the complete file and copy the code here.

Hover your cursor over the code block to reveal the copy-code option.

from dataclasses import dataclass, field

from typing import Any, Deque, Dict, List, Literal, Optional, TypedDict, Union

from models.core import AgentGoal

# Common type aliases

Message = Dict[str, Union[str, Dict[str, Any]]]

ConversationHistory = Dict[str, List[Message]]

NextStep = Literal["confirm", "question", "pick-new-goal", "done"]

CurrentTool = str

class ToolData(TypedDict, total=False):

next: NextStep

tool: str

response: str

args: Dict[str, Any]

force_confirm: bool

@dataclass

class AgentGoalWorkflowParams:

conversation_summary: Optional[str] = None

prompt_queue: Optional[Deque[str]] = None

@dataclass

class CombinedInput:

tool_params: AgentGoalWorkflowParams

agent_goal: AgentGoal

@dataclass

class ToolPromptInput:

prompt: str

context_instructions: str

@dataclass

class ValidationInput:

prompt: str

conversation_history: ConversationHistory

agent_goal: AgentGoal

@dataclass

class ValidationResult:

validationResult: bool

validationFailedReason: Dict[str, Any] = field(default_factory=dict)

@dataclass

class EnvLookupInput:

show_confirm_env_var_name: str

show_confirm_default: bool

@dataclass

class EnvLookupOutput:

show_confirm: bool

Now that you have your custom types defined for Activity communication, you can implement the Activities.

Creating the Activities submodule

First, create the directory structure for your Activities and make it a module:

mkdir activities

touch activities/__init__.py

Next, create the file activities/activities.py and add the necessary import statements and a statement to load the environment variables:

import inspect

import json

import os

from datetime import datetime

from typing import Sequence

from dotenv import load_dotenv

from litellm import completion

from temporalio import activity

from temporalio.common import RawValue

from models.requests import (

EnvLookupInput,

EnvLookupOutput,

ToolPromptInput,

ValidationInput,

ValidationResult,

)

load_dotenv(override=True)

This imports various system packages, Temporal libraries, the litellm package for making LLM calls, the dotenv package for loading environment variables, and a number of custom types you defined in models/requests.py.

Next, you'll create the AgentActivities class, which contains activities the agent will call to achieve its goal.

Constructing the AgentActivities Class

The AgentActivities class enables the Workflow to plan which tools to use, validate prompts, read in environment variables, and more.

To implement it, open activities/activities.py and create the class and define the __init__ method:

class AgentActivities:

def __init__(self):

"""Initialize LLM client using LiteLLM."""

self.llm_model = os.environ.get("LLM_MODEL", "openai/gpt-4")

self.llm_key = os.environ.get("LLM_KEY")

self.llm_base_url = os.environ.get("LLM_BASE_URL")

activity.logger.info(

f"Initializing AgentActivities with LLM model: {self.llm_model}"

)

if self.llm_base_url:

activity.logger.info(f"Using custom base URL: {self.llm_base_url}")

Temporal Activities can be implemented as either a function or a class and method.

As the agent requires a persistent object for communication, in this case, communicating to the LLM, it's good practice to use a class and set the parameters as part of the initialization of the Activity, so to not waste resources re-initializing the object for every LLM call.

The __init__ method reads the LLM configuration from environment variables and assigns the values to instance variables.

Implementing various helper methods

Before you implement the Activities, implement the following helper functions:

The first method sanitizes the JSON response you get from the LLM and sanitizing it to a proper JSON string. The LLM may return a string with extra whitespace, or formatted as markdown, so sanitizing the string is necessary before parsing it.

Add the following helper method to the bottom of your activities.py file:

def sanitize_json_response(self, response_content: str) -> str:

"""

Sanitizes the response content to ensure it's valid JSON.

"""

# Remove any markdown code block markers

response_content = response_content.replace("```json", "").replace("```", "")

# Remove any leading/trailing whitespace

response_content = response_content.strip()

return response_content

The second helper function takes a string as input and returns a dictionary after attempting to parse the string as valid JSON.

Add this method to the bottom of your activities.py file:

def parse_json_response(self, response_content: str) -> dict:

"""

Parses the JSON response content and returns it as a dictionary.

"""

try:

data = json.loads(response_content)

return data

except json.JSONDecodeError as e:

activity.logger.error(f"Invalid JSON: {e}")

raise

Now that you have the helper methods implemented, you can implement the Activity responsible for making LLM calls.

Implementing the Activity for making LLM calls

The agent_toolPlanner Activity handles all interactions with your chosen LLM.

It makes the call to the LLM, parses the response and returns JSON on success, and raises an Exception on failure.

Add the method header with the appropriate decorator to your activities.py file, underneath the __init__ method:

@activity.defn

async def agent_toolPlanner(self, input: ToolPromptInput) -> dict:

Next, create the messages list, which contains various dictionaries with the data necessary to perform an LLM prompt.

This format is specifically OpenAI's format, which you can use for any LLM, because you are using LiteLLM to as your LLM abstraction library.

Add the following code to craft the messages list:

messages = [

{

"role": "system",

"content": input.context_instructions

+ ". The current date is "

+ datetime.now().strftime("%B %d, %Y"),

},

{

"role": "user",

"content": input.prompt,

},

]

The agent_toolPlanner Activity constructs standard OpenAI-format messages with system context and user input.

It automatically includes the current date, which helps the language model provide accurate responses for time-sensitive queries.

Continue the method with the LLM call implementation:

try:

completion_kwargs = {

"model": self.llm_model,

"messages": messages,

"api_key": self.llm_key,

}

# Add base_url if configured

if self.llm_base_url:

completion_kwargs["base_url"] = self.llm_base_url

response = completion(**completion_kwargs)

response_content = response.choices[0].message.content

activity.logger.info(f"LLM response: {response_content}")

# Use the new sanitize function

response_content = self.sanitize_json_response(response_content)

return self.parse_json_response(response_content)

except Exception as e:

activity.logger.error(f"Error in LLM completion: {str(e)}")

raise

This call is wrapped in a try/except statement to handle a potential failure.

It creates a dictionary containing the arguments for calling the LLM, including the model choice, the messages, the API key, and a custom base URL if set.

Next it performs the call to the LLM using the completion function, passing in the arguments dictionary.

It then extracts the message you want from the response content, sanitizes the JSON and returns it as properly parsed JSON upon success.

Upon failure, it will raise an exception.

The complete implementation of agent_toolPlanner is as follows:

@activity.defn

async def agent_toolPlanner(self, input: ToolPromptInput) -> dict:

messages = [

{

"role": "system",

"content": input.context_instructions

+ ". The current date is "

+ datetime.now().strftime("%B %d, %Y"),

},

{

"role": "user",

"content": input.prompt,

},

]

try:

completion_kwargs = {

"model": self.llm_model,

"messages": messages,

"api_key": self.llm_key,

}

# Add base_url if configured

if self.llm_base_url:

completion_kwargs["base_url"] = self.llm_base_url

response = completion(**completion_kwargs)

response_content = response.choices[0].message.content

activity.logger.info(f"LLM response: {response_content}")

# Use the new sanitize function

response_content = self.sanitize_json_response(response_content)

return self.parse_json_response(response_content)

except Exception as e:

activity.logger.error(f"Error in LLM completion: {str(e)}")

raise

Now that you have implemented the Activity to call the LLM, you will implement the Activity to validate the user's prompts.

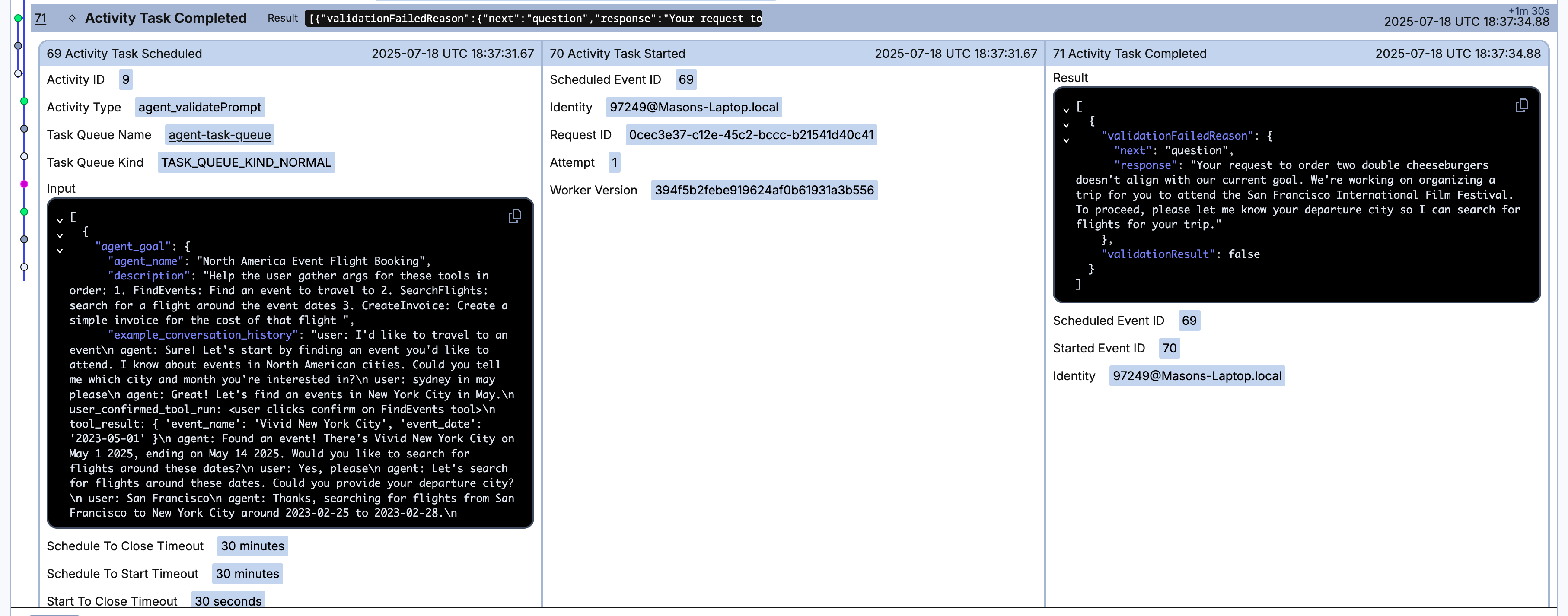

Implementing the Activity for prompt validation

It is important to not let the user take your agent off on a tangent, sending prompts that are not related to the goal. To do this, you must validate the prompt against your agent's goal and context prior to executing the LLM with the user's input.

Next, create the agent_validatePrompt Activity to validate any prompt sent to the LLM in the context of the conversation history and agent goal.

Within the AgentActivities class, add the following method header:

@activity.defn

async def agent_validatePrompt(

self, validation_input: ValidationInput

) -> ValidationResult:

"""

Validates the prompt in the context of the conversation history and agent goal.

Returns a ValidationResult indicating if the prompt makes sense given the context.

"""

This Activity takes in a single argument, using the custom ValidationInput type you specified, and returns a single value, ValidationResult, in accordance with Temporal best practices.

Next, add the code following code to iterate over the tools specified in the agent's goal and add them to a list.

# Create simple context string describing tools and goals

tools_description = []

for tool in validation_input.agent_goal.tools:

tool_str = f"Tool: {tool.name}\n"

tool_str += f"Description: {tool.description}\n"

tool_str += "Arguments: " + ", ".join(

[f"{arg.name} ({arg.type})" for arg in tool.arguments]

)

tools_description.append(tool_str)

tools_str = "\n".join(tools_description)

By doing this, you are creating a string the LLM can use as context to validate against. This context helps the LLM understand what capabilities are available to the agent, and whether or not the prompt the user sent makes sense.

Continue the validation method by adding conversation context:

# Convert conversation history to string

history_str = json.dumps(validation_input.conversation_history, indent=2)

# Create context instructions

context_instructions = f"""The agent goal and tools are as follows:

Description: {validation_input.agent_goal.description}

Available Tools:

{tools_str}

The conversation history to date is:

{history_str}"""

This section gathers the past conversation history and concatenates it with the available tool context, creating a complete context for the LLM.

Next, add the following prompt for the LLM to use to validate the prompt:

# Create validation prompt

validation_prompt = f"""The user's prompt is: "{validation_input.prompt}"

Please validate if this prompt makes sense given the agent goal and conversation history.

If the prompt makes sense toward the goal then validationResult should be true.

If the prompt is wildly nonsensical or makes no sense toward the goal and current conversation history then validationResult should be false.

If the response is low content such as "yes" or "that's right" then the user is probably responding to a previous prompt.

Therefore examine it in the context of the conversation history to determine if it makes sense and return true if it makes sense.

Return ONLY a JSON object with the following structure:

"validationResult": true/false,

"validationFailedReason": "If validationResult is false, provide a clear explanation to the user in the response field

about why their request doesn't make sense in the context and what information they should provide instead.

validationFailedReason should contain JSON in the format

{{

"next": "question",

"response": "[your reason here and a response to get the user back on track with the agent goal]"

}}

If validationResult is true (the prompt makes sense), return an empty dict as its value {{}}"

"""

Finally, instantiate a ToolPromptInput object and pass that to agent_toolPlanner to execute:

# Call the LLM with the validation prompt

prompt_input = ToolPromptInput(

prompt=validation_prompt, context_instructions=context_instructions

)

result = await self.agent_toolPlanner(prompt_input)

return ValidationResult(

validationResult=result.get("validationResult", False),

validationFailedReason=result.get("validationFailedReason", {}),

)

The complete implementation of agent_validatePrompt is as follows:

@activity.defn

async def agent_validatePrompt(

self, validation_input: ValidationInput

) -> ValidationResult:

"""

Validates the prompt in the context of the conversation history and agent goal.

Returns a ValidationResult indicating if the prompt makes sense given the context.

"""

# Create simple context string describing tools and goals

tools_description = []

for tool in validation_input.agent_goal.tools:

tool_str = f"Tool: {tool.name}\n"

tool_str += f"Description: {tool.description}\n"

tool_str += "Arguments: " + ", ".join(

[f"{arg.name} ({arg.type})" for arg in tool.arguments]

)

tools_description.append(tool_str)

tools_str = "\n".join(tools_description)

# Convert conversation history to string

history_str = json.dumps(validation_input.conversation_history, indent=2)

# Create context instructions

context_instructions = f"""The agent goal and tools are as follows:

Description: {validation_input.agent_goal.description}

Available Tools:

{tools_str}

The conversation history to date is:

{history_str}"""

# Create validation prompt

validation_prompt = f"""The user's prompt is: "{validation_input.prompt}"

Please validate if this prompt makes sense given the agent goal and conversation history.

If the prompt makes sense toward the goal then validationResult should be true.

If the prompt is wildly nonsensical or makes no sense toward the goal and current conversation history then validationResult should be false.

If the response is low content such as "yes" or "that's right" then the user is probably responding to a previous prompt.

Therefore examine it in the context of the conversation history to determine if it makes sense and return true if it makes sense.

Return ONLY a JSON object with the following structure:

"validationResult": true/false,

"validationFailedReason": "If validationResult is false, provide a clear explanation to the user in the response field

about why their request doesn't make sense in the context and what information they should provide instead.

validationFailedReason should contain JSON in the format

{{

"next": "question",

"response": "[your reason here and a response to get the user back on track with the agent goal]"

}}

If validationResult is true (the prompt makes sense), return an empty dict as its value {{}}"

"""

# Call the LLM with the validation prompt

prompt_input = ToolPromptInput(

prompt=validation_prompt, context_instructions=context_instructions

)

result = await self.agent_toolPlanner(prompt_input)

return ValidationResult(

validationResult=result.get("validationResult", False),

validationFailedReason=result.get("validationFailedReason", {}),

)

Calling an Activity within another Activity won't invoke that Activity, but will call the method like a typical Python method.

The Activity then returns a ValidationResult for the agent to interpret and continue with its execution.

Implementing the Activity for retrieving environment variables

The final Activity within the AgentActivities class is the get_wf_env_vars Activity.

This Activity reads certain environment variables that need to be known within the Workflow.

Since reading from the file system is a potentially non-deterministic operation, this must happen within an Activity.

Add the following code within the AgentActivities class to implement the Activity:

@activity.defn

async def get_wf_env_vars(self, input: EnvLookupInput) -> EnvLookupOutput:

"""gets env vars for workflow as an activity result so it's deterministic

handles default/None

"""

output: EnvLookupOutput = EnvLookupOutput(

show_confirm=input.show_confirm_default

)

show_confirm_value = os.getenv(input.show_confirm_env_var_name)

if show_confirm_value is None:

output.show_confirm = input.show_confirm_default

elif show_confirm_value is not None and show_confirm_value.lower() == "false":

output.show_confirm = False

else:

output.show_confirm = True

return output

This Activity reads the environment variables and ensures that show_confirm_value is set, returning your custom EnvLookupOutput type.

While this type may only contain one value at the moment, having it designed with this custom type allows you to expand this method later if necessary.

You have implemented all Activities within the AgentActivities class, but there is still one Activity left to implement, the Activity for executing the tools.

Implementing dynamic tool execution

The final Activity enables runtime execution of any tool from your registry. To enable this, you must use Dynamic Activities, which are necessary when you request execution of an Activity with an unknown Activity Type. Since your tools are loaded in dynamically, this is a perfect example of when to use Temporal's Dynamic Activities.

This Activity will not be implemented as a method within the class, but rather a function within the same activities.py file.

Add this function outside the class definition:

@activity.defn(dynamic=True)

async def dynamic_tool_activity(args: Sequence[RawValue]) -> dict:

from tools.tool_registry import get_handler

tool_name = activity.info().activity_type # e.g. "FindEvents"

tool_args = activity.payload_converter().from_payload(args[0].payload, dict)

activity.logger.info(f"Running dynamic tool '{tool_name}' with args: {tool_args}")

# Delegate to the relevant function

handler = get_handler(tool_name)

if inspect.iscoroutinefunction(handler):

result = await handler(tool_args)

else:

result = handler(tool_args)

# Optionally log or augment the result

activity.logger.info(f"Tool '{tool_name}' result: {result}")

return result

This dynamic Activity uses Temporal's runtime information to determine which tool to execute.

It retrieves the tool name from the Activity type and loads arguments from the payload.

It then inspects the handler to determine if the implementation of the tool is an asynchronous Python function. If it is, it awaits its execution, otherwise it directly invokes the function.

This means the Activity handles both synchronous and asynchronous tool functions.

The activities/activities.py is complete and will need no more revisions. You can review the complete file and copy the code here.

Hover your cursor over the code block to reveal the copy-code option.

import inspect

import json

import os

from datetime import datetime

from typing import Sequence

from dotenv import load_dotenv

from litellm import completion

from temporalio import activity

from temporalio.common import RawValue

from models.requests import (

EnvLookupInput,

EnvLookupOutput,

ToolPromptInput,

ValidationInput,

ValidationResult,

)

load_dotenv(override=True)

class AgentActivities:

def __init__(self):

"""Initialize LLM client using LiteLLM."""

self.llm_model = os.environ.get("LLM_MODEL", "openai/gpt-4")

self.llm_key = os.environ.get("LLM_KEY")

self.llm_base_url = os.environ.get("LLM_BASE_URL")

activity.logger.info(

f"Initializing AgentActivities with LLM model: {self.llm_model}"

)

if self.llm_base_url:

activity.logger.info(f"Using custom base URL: {self.llm_base_url}")

@activity.defn

async def agent_toolPlanner(self, input: ToolPromptInput) -> dict:

messages = [

{

"role": "system",

"content": input.context_instructions

+ ". The current date is "

+ datetime.now().strftime("%B %d, %Y"),

},

{

"role": "user",

"content": input.prompt,

},

]

try:

completion_kwargs = {

"model": self.llm_model,

"messages": messages,

"api_key": self.llm_key,

}

# Add base_url if configured

if self.llm_base_url: